It is the mark of clear thinking and good rhetorical style that when I start a sentence, I finish it in a suitable, grammatical fashion. A complete sentence is synonymous with a complete thought. In the world of AI, completing sentences has become the foundation of the Large Language Models that have started talking back to us. The problem these neural networks are quite literally being employed to solve is: “What word comes next?” Or at least, this is how it was explained in Steven Johnson’s excellent Times article on the recent, and frankly impressive, advances made by GPT-3, the Large Language Model created by OpenAI.

As is apparently necessary for a Silicon Valley project founded by men whose fortunes were accrued through means as prosaic as inherited mineral wealth and building online payment systems, OpenAI sees itself on a grand mission for both the protection and the flourishing of mankind. Its founders see beyond the exciting progress currently being made in artificial intelligence and foresee the arrival of artificial general intelligence. That is to say, they extrapolate from facial recognition, language translation, and text autocomplete all the way to a science fiction conceit. They believe they may be the midwives to the birth of an advanced intelligence, one that we will likely struggle to understand and should fear, as we would a god or alien visitors.

What GPT-3 can actually do is take a fragment of text and give it a continuation. That continuation can take many precise forms: it can answer a question, summarize an argument, or describe the function of a piece of code. I can’t guarantee that it will do these things well, but you can sign up on OpenAI’s website and try it for yourself. The effects can certainly be arresting. You might have read Vauhini Vara’s essay “Ghosts” in The Believer. She was given early access to the GPT-3 “playground” and used it as a kind of sounding board to write honestly about her sister’s death. You can read the sentences that Vara fed the model alongside the responses it offered, and quickly get a feel for what GPT-3 can and can’t do.

—

It will be important for what I am about to say that I explain something of how machine learning works. I imagine that most of you reading this are not familiar with the theory behind artificial intelligence, and are possibly intimidated by it. But at its core, it is doing something quite familiar.

Most of us will have been taught some elementary statistical techniques at school. A typical exercise involves being presented with a sheet of graph paper, with a familiar x and y axis and a smattering of data points. Maybe x is the number of bedrooms, and y is the price of the house. Maybe x is rainfall, and y is the size of the harvest. Maybe x is the amount of sugar in a food product, and y is its average weekly sales. After staring at that cluster of points for a moment, you take your pencil and ruler and set down a “line of best fit”. From the chaos of those disparate, singular points on the page, you identify a pattern, a correlation, a “trend”, and then impose a straight line (a linear structure) on it. With that line of best fit drawn, you can start making predictions: given a value of x, what is the corresponding value of y?
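For anyone curious what the pencil-and-ruler exercise looks like when a computer does it, here is a minimal Python sketch of the standard least-squares line of best fit. The bedroom and price figures are invented purely for illustration, and the function names are my own; the formula itself is the one taught in school statistics.

```python
def line_of_best_fit(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, divided by how much x varies on its own, gives the slope.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The least-squares line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented data: number of bedrooms (x) against house price in thousands (y).
bedrooms = [1, 2, 3, 4, 5]
prices = [150, 210, 260, 330, 380]

slope, intercept = line_of_best_fit(bedrooms, prices)


def predict(x):
    """Read a value of y off the fitted line for a given x."""
    return slope * x + intercept


print(f"price ~ {slope:.1f} * bedrooms + {intercept:.1f}")  # price ~ 58.0 * bedrooms + 92.0
print(f"predicted price for 6 bedrooms: {predict(6):.1f}")  # predicted price for 6 bedrooms: 440.0
```

Once the two coefficients (slope and intercept) are known, the original points are no longer needed to make a prediction.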

This is, essentially, what machine learning and neural networks do.

The initial data points are referred to as the training data. In practice, however, this is done in many more than two dimensions; many, many more, in fact. As a consequence, eyeballing the line of best fit is impossible. Instead, the line is found through a process called gradient descent. Taking some random line as a starting point, small, incremental changes are made to it, each one nudging it in whichever direction most reduces the error between the line and the data points, until the line settles close to the presumed shape of the training data.
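Here is a rough Python sketch of gradient descent doing the ruler’s job on a small set of invented house-price figures. The learning rate, number of steps, and random seed are arbitrary choices that happen to suit this tiny example, not values from any real model. It starts from a random line and repeatedly nudges the slope and intercept downhill on the average squared error; for these points it should settle on a slope of 58 and an intercept of 92, which is what the textbook least-squares formula gives.

```python
import random


def fit_by_gradient_descent(xs, ys, learning_rate=0.01, steps=20_000, seed=0):
    """Fit y = slope * x + intercept by gradient descent on the mean squared error."""
    rng = random.Random(seed)
    # Start from an arbitrary, randomly chosen line.
    slope, intercept = rng.uniform(-1, 1), rng.uniform(-1, 1)
    n = len(xs)
    for _ in range(steps):
        # How far the current line misses each data point.
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to each coefficient.
        grad_slope = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = 2 / n * sum(errors)
        # Take a small step downhill: adjust the line to reduce the error slightly.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Invented data: number of bedrooms (x) against house price in thousands (y).
bedrooms = [1, 2, 3, 4, 5]
prices = [150, 210, 260, 330, 380]

slope, intercept = fit_by_gradient_descent(bedrooms, prices)
print(f"slope {slope:.2f}, intercept {intercept:.2f}")
```

With two coefficients this is overkill, since a formula gives the answer directly. The point is that the same nudging procedure still works when there are billions of coefficients and no formula exists.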

I say “lines”, but I mean some kind of higher-dimensional curves. In the simplest case they are flat, but in GPT-3 they are very curvy indeed. Fitting such curvy curves to the data is more involved, and this is where neural networks come in. But ultimately, all they do is provide a means of lining up, bending, and shaping the curves to fit those data points.
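To give a feel for how a neural network bends a curve, here is a toy Python sketch: a network with a single layer of eight tanh units, trained by plain gradient descent to follow points on a sine wave. Everything in it (the data, the network size, the learning rate, the helper names) is a hypothetical choice for illustration. Real language models use vastly larger networks and more sophisticated training, but the principle of nudging coefficients to reduce the error is the same.

```python
import math
import random


def make_network(hidden=8, seed=0):
    """Randomly initialise a one-hidden-layer network with tanh units."""
    rng = random.Random(seed)
    return {
        "w": [rng.uniform(-1, 1) for _ in range(hidden)],  # input -> hidden weights
        "b": [rng.uniform(-1, 1) for _ in range(hidden)],  # hidden biases
        "v": [rng.uniform(-1, 1) for _ in range(hidden)],  # hidden -> output weights
        "c": 0.0,                                          # output bias
    }


def predict(net, x):
    # Each hidden unit contributes one smooth S-shaped bend; the output adds them up.
    hidden = [math.tanh(w * x + b) for w, b in zip(net["w"], net["b"])]
    return sum(v * h for v, h in zip(net["v"], hidden)) + net["c"], hidden


def mean_squared_error(net, xs, ys):
    return sum((predict(net, x)[0] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(net, xs, ys, learning_rate=0.05, steps=2000):
    """Full-batch gradient descent, with the gradients worked out by hand (backpropagation)."""
    n, k = len(xs), len(net["w"])
    for _ in range(steps):
        gw, gb, gv, gc = [0.0] * k, [0.0] * k, [0.0] * k, 0.0
        for x, y in zip(xs, ys):
            out, hidden = predict(net, x)
            d_out = 2 * (out - y) / n  # derivative of the loss with respect to this output
            gc += d_out
            for j in range(k):
                gv[j] += d_out * hidden[j]
                # Chain rule through tanh: d tanh(z)/dz = 1 - tanh(z)^2.
                d_z = d_out * net["v"][j] * (1 - hidden[j] ** 2)
                gw[j] += d_z * x
                gb[j] += d_z
        # Nudge every coefficient a small step downhill.
        for j in range(k):
            net["w"][j] -= learning_rate * gw[j]
            net["b"][j] -= learning_rate * gb[j]
            net["v"][j] -= learning_rate * gv[j]
        net["c"] -= learning_rate * gc
    return net


# Hypothetical curvy data: 21 evenly spaced points on y = sin(3x) for x in [-1, 1].
xs = [i / 10 for i in range(-10, 11)]
ys = [math.sin(3 * x) for x in xs]

net = make_network()
initial_loss = mean_squared_error(net, xs, ys)
train(net, xs, ys)
final_loss = mean_squared_error(net, xs, ys)
print(f"error before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

A single straight line cannot follow a sine wave, but a sum of adjustable bends can; gradient descent decides where each bend sits and how much it counts.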

You might be startled that things as profound as language, facial recognition, or creating art might all be captured in a curve, but bear in mind that these curves are very high-dimensional and very curvy indeed (and I’m omitting a lot of detail). It is worth noting that the fact we can do this at all has required three things: 1) lots of computing power, 2) large, readily available data sets, and 3) a toolbox of techniques, heuristics, and mathematical ideas for setting the coefficients that determine the curves.

—

I’m not sure that the writing world has absorbed the implications of all this. Here is what a Large Language Model could very easily do to writing. Suppose I write a paragraph of dog-shit prose: half-baked thoughts put together in awkwardly written sentences. Imagine that I highlight that paragraph, right-click, and somewhere in the menu that drops down there is the option to rewrite it. Instant revision, with no new semantic content added. Clauses are simply rearranged, the flow is adjusted with mathematical precision, certain word choices are reconsidered, and suddenly everything is clear. I would use it like a next-level spell checker.

And that is not all: I could revise the paragraph into a particular prose style. Provided with a corpus of suitable training data, I could have my sentences stripped of adjectives and set out in terse journalistic reportage. Or maybe I opt for the narrative cadence of David Sedaris. So long as there is enough of the writer’s work available, the curve could be suitably adjusted.

In his Times article, Johnson devotes considerable attention to the founders’ intention and effort to ensure that OpenAI is on the side of the angels. They created a charter of values in which they aspire to hold themselves deeply responsible for the implications of their creation. It is a charter that reads, frankly and literally, as hubristic: they anticipate the arrival of Artificial General Intelligence while they fine-tune a machine that can convincingly churn out shit poetry. Initially founded as a non-profit, OpenAI has since birthed the for-profit corporation OpenAI LP, but made the decision to cap the potential profits for its investors, with Microsoft looming particularly large.

But there was another kind of investment made in GPT-3: all the collected writing that was scraped up off the internet. This is the raw material exploited by the gradient descent algorithms, training and bending those curves into the desired shape. Ultimately, it is true that they are extracting coefficients from all that text, but it is unmistakable how closely those curves hew, in their abstract way, to the words that breathed life into them. OpenAI actually explains that unfiltered internet content is actively unhelpful: they need quality writing. Here is how they curated the content for GPT-2:

Instead, we created a new web scrape which emphasizes document quality. To do this we only scraped web pages which have been curated/filtered by humans. Manually filtering a full web scrape would be exceptionally expensive so as a starting point, we scraped all outbound links from Reddit, a social media platform, which received at least 3 karma. This can be thought of as a heuristic indicator for whether other users found the link interesting, educational, or just funny.

(From Language Models are Unsupervised Multitask Learners)

For all of Johnson’s discussion of OpenAI’s earnest proclamations of ethical standards and efforts to tame the profit motive, there is little discussion of how the principal investment that makes the entire scheme work is the huge body of writing available online, which is used to train the model and fit the curve. I’m not talking about plagiarism; I’m talking about extracting coefficients from text that can then be exploited for fun and profit. Suppose my fantasy of a word processor tool that can fix prose style comes true. Suppose some hack writer takes their first draft and uses a Neil Gaiman-trained model to produce an effective Gaiman pastiche that they can sell to a publisher. Should Gaiman be calling his lawyer? Should he feel injured? Should he be selling the model himself? How much of the essence of his writing is captured in the coefficients of the curve that was drawn from his words?

Would aspiring writers be foolish not to use such a tool? With many writers aiming their novels at the audiences of existing writers and bestsellers, why would they want to gamble on their own early, barely developed style? Will an editor just run what they write through the Gaiman/King/Munroe/DFW/Gram/Lewis re-drafter? Are editors doing a less precise version of this anyway? Does it matter that nothing is changing semantically? Long sentences are shortened. Semicolons are removed. Esoteric words are replaced with safer choices.

The standard advice to aspiring writers is that they should read. They should read a lot: classics, contemporary, fiction, non-fiction, good, bad, genre, literary. If we believe that these large language models at all reflect what goes on in our own minds, then you can think of this process as analogous to training the model. Read a passage, underline the phrases you think are good, and leave disapproving marks by the phrases that are bad. You are bending and shaping your own curve to your own reward function. With statistical models there is always the danger of “over-fitting the data”, and in writing you can be derivative, an imitator, guilty of pastiche. At the more extreme end, when a red-capped, red-faced member of “the base” unthinkingly repeats Fox News talking points, what do we have but an individual whose internal curve has been over-fitted?

It is often bemoaned that we live in an age of accumulated culture, nostalgia, and retro inclination. Our blockbusters feature superheroes created in the previous century. There is something painfully static and conservative about it all. But what if artificial intelligence leads us further down the road of writing variations of the same old sentences over and over again?

—

In his essay “The Death of the Author”, Barthes asserts:

We know now that a text is not a line of words releasing a single ‘theological’ meaning (the ‘message’ of the Author God) but a multi-dimensional space in which a variety of writings, none of them original, blend and clash. The text is a tissue of quotations drawn from the innumerable centers of culture.

Granted, Barthes was quite likely half-bullshitting when he threw out phrases like “multi-dimensional space”, but what I have quoted above is nonetheless a disturbingly accurate description of the workings of a large language model. Yet it isn’t describing the large language model. It is a description of how we write. Barthes continues:

the writer can only imitate a gesture that is always anterior, never original. His only power is to mix writings, to counter the ones with the others, in such a way as never to rest on any one of them. Did he wish to express himself, he ought at least to know that the inner ‘thing’ he thinks to ‘translate’ is itself only a ready-formed dictionary, its words only explainable through other works, and so on indefinitely.

Maybe Gaiman would have no more cause to call his lawyer than would the great many writers he absorbed, reading and then imitating them in his youth and early adulthood. Maybe, if Barthes is to be believed here, the author is dead and the algorithm is alive. Our creativity is well approximated by a very curvy curve in a high-dimensional space.

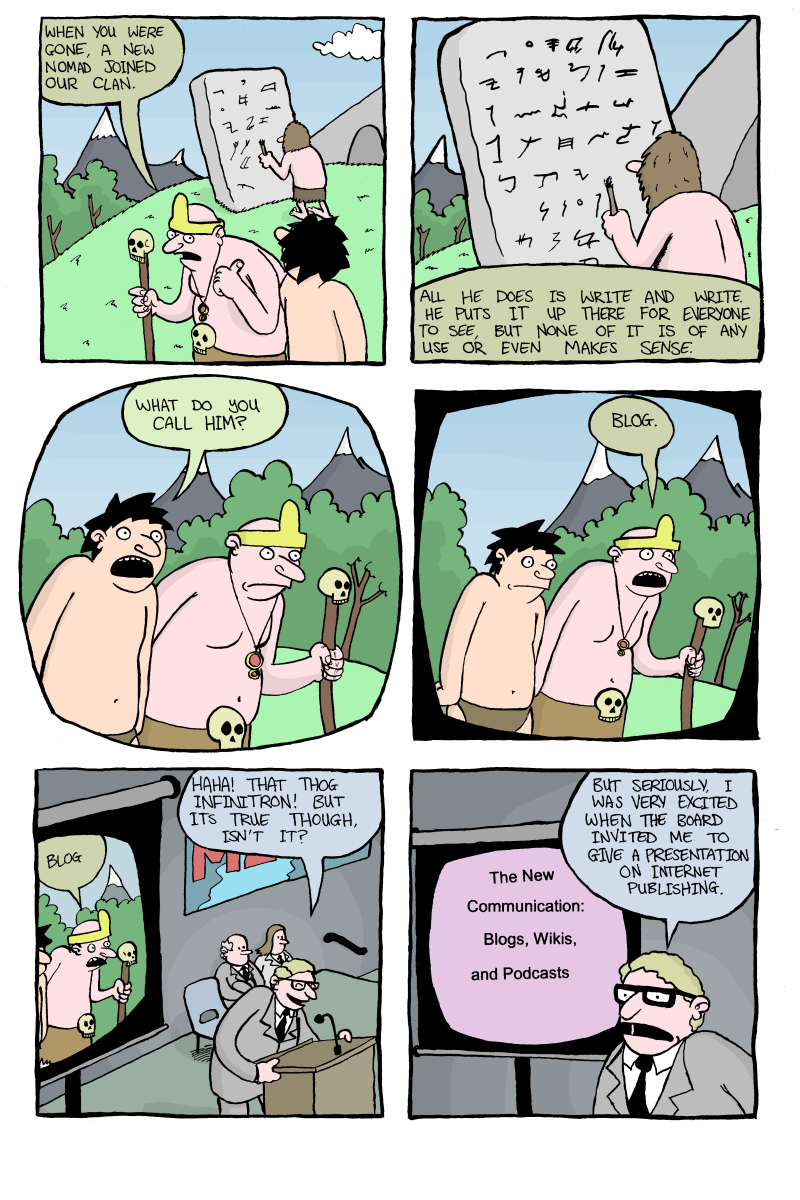

One potential outcome that does not seem to have been considered by our Silicon Valley aristocracy is that the Artificial General Intelligence they bring into this world will be an utterly prosaic thinker with little of actual interest to say. That shouldn’t stop it from getting a podcast, though.

Or maybe Barthes was wrong, and Large Language Models will continue to be deficient in some very real capacity. Maybe writers do have something to say, and their process of writing is their way of discovering it. Maybe we don’t have to consider a future where venture capitalists have a server farm churning out viable bestsellers in the same fashion they render CGI explosions for the latest Marvel movie. Maybe we should get back to finishing the next sentence of our novel, because the algorithm won’t actually be able to finish it for us. Maybe.